research

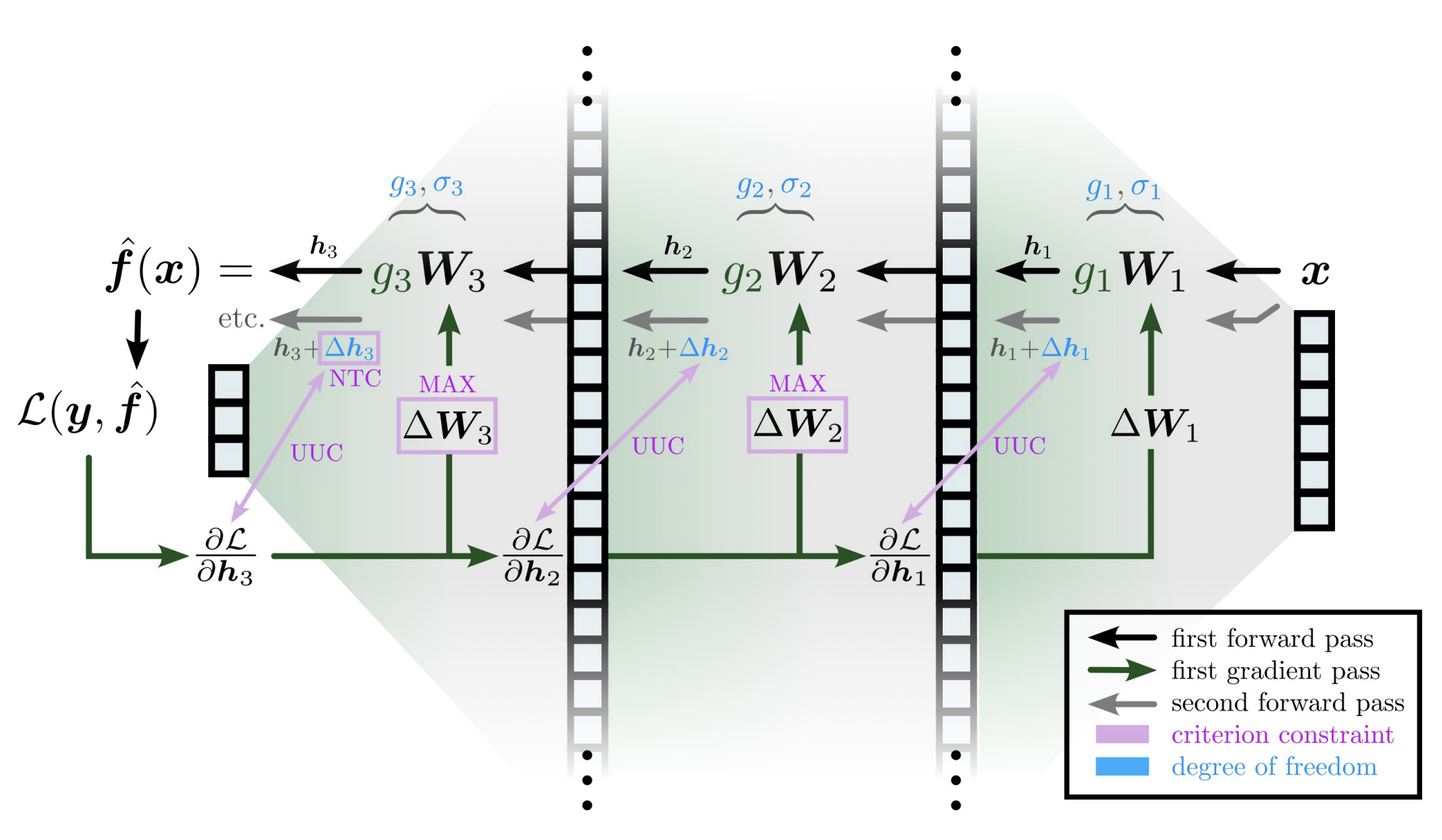

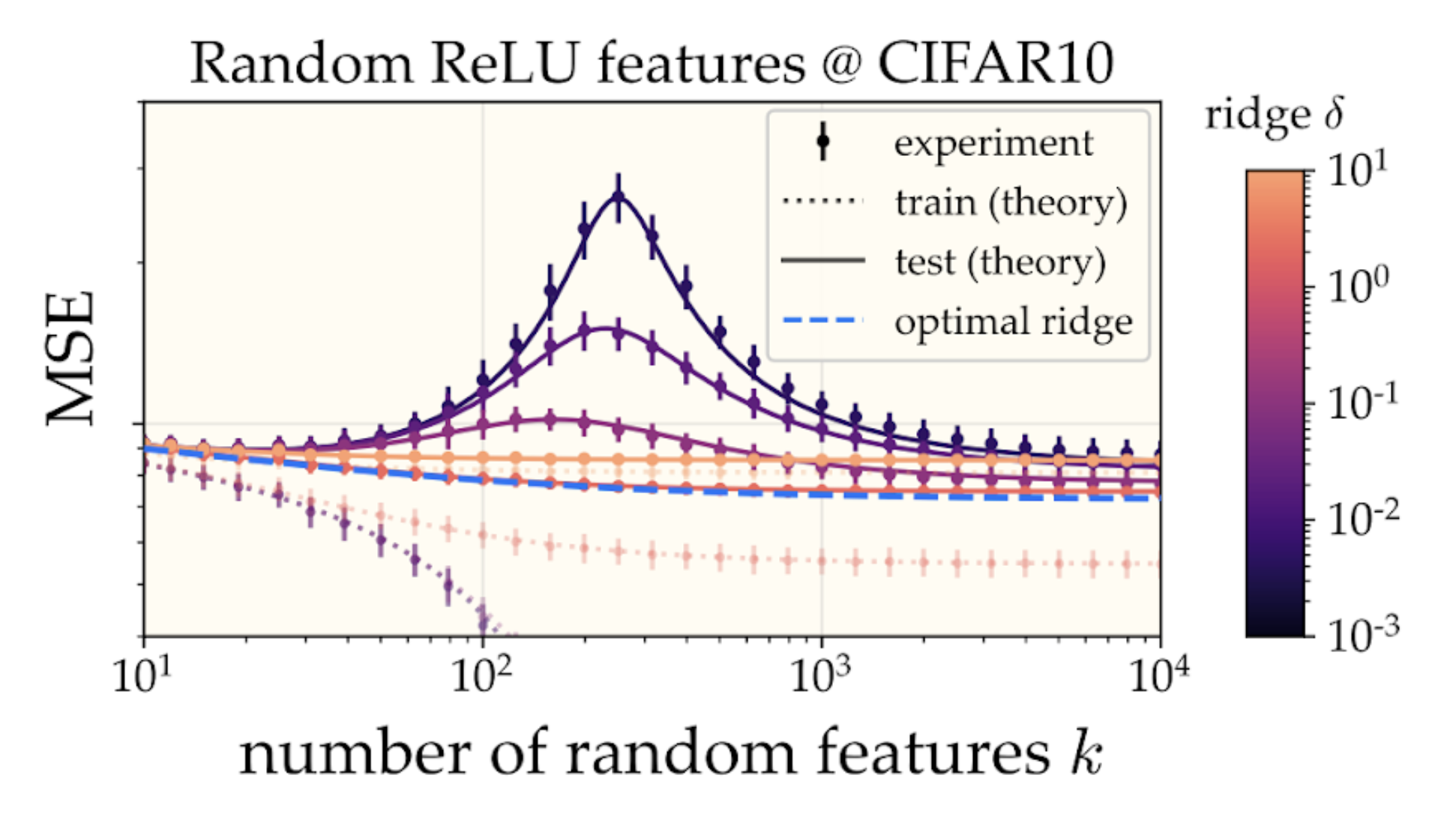

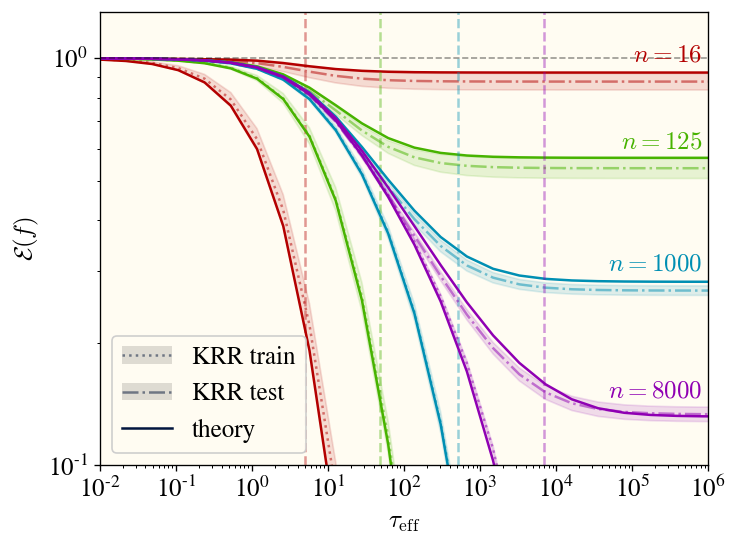

Deep learning is humanity’s most successful attempt thus far to imitate human intelligence. Despite fundamental differences between deep learning systems and biological brains, deep learning remains a theoretically and experimentally accessible playground for understanding learning as a general phenomenon. Even as a toy model of learning, deep learning is itself mysterious in many ways. Experiments reveal many interesting behaviors (e.g., feature learning, neural scaling laws, emergent abilities) that remain poorly understood from a theoretical standpoint. In particular, I’m interested in the large-learning-rate phenomena associated with feature learning (e.g., dynamics of the local loss geometry, representation alignment, and edge-of-stability behavior), and I hope to understand why deep learning is more sample efficient than kernel machines.

My advisor is Michael DeWeese and I’m affiliated with the Berkeley AI Research group and the Redwood Center for Theoretical Neuroscience. Find a list of my recent work below.